Joe Armstrong wrote a paper for last year’s HOPL-III conference on the history of Erlang. For some reason, I didn’t get a paper copy of those proceedings, and was too busy to notice their absence. Fortunately Lambda the Ultimate picked it up and supplied links to the paper and the accompanying presentation. Digging into the history of something like Erlang is always fascinating, and Armstrong has done a good job of explaining how Erlang came to be.

Here are a bunch of quotes on topics that I found interesting. I’ve grouped them into categories, but searching the PDF of the paper shouldn’t be hard if you want to know where they originated.

Sources of inspiration

Those familiar with Prolog will not find it at all surprising that Erlang has its roots in Prolog (mostly due to implementation reasons). What I did find interesting was the origin/history/viewpoint on the concurrency model

The explanations of what Erlang was have changed with time:

1. 1986 – Erlang is a declarative language with added concurrency.

2. 1995 – Erlang is a functional language with added concurrency.

3. 2005 – Erlang is a concurrent language consisting of communicating components where the components are written in a functional language.

…

Today we emphasize the concurrency.

Note that the word actor never appears in those descriptions. Indeed, the word actor does not appear in the paper at all. So for all the discussion about Erlang’s usage of the actor model, it appears that the Erlang folks independently duplicated many of the ideas for Hewitt’s Actors. I think that is kind of interesting.

Lisp and Smalltalk are cited as inspirations, but more for the implementation of the runtime than for any features in the language. I came away from the paper with the impression that Armstrong and his colleagues are not paradigm ideologues. They are trying to get the job done.

Reliability

There is a huge emphasis on reliability throughout the paper, supporting Steve Vinoski’s remarks about Erlang. I’l just include a series of quotes, which you can interpret as you see fit:

Erlang was designed for writing concurrent programs that “run forever”

At an early stage we rejected any ideas of sharing resources between processes because of the difficulty of error handling. In many circumstances, error recovery is impossible if part of the data needed to perform the error recovery is located on a remote machine and if that remote machine has crashed.

In order to make systems reliable, we have to accept the extra cost of copying data structures between processes and always make sure that processes have enough data to continue by themselves if other processes crash

The key observation here is to note that the error-handling mechanisms were designed for building fault-tolerant systems, and not merely for protecting from program exceptions. You cannot build a fault-tolerant system if you only have one computer. The minimal conï¬guration for a fault-tolerant system has two computers. These must be conï¬gured so that both observe each other. If one of the computers crashes, then the other computer must take over whatever the ï¬rst computer was doing.

This means that the model for error handling is based on the idea of two computers that observe each other. Error detection and recovery is performed on the remote computer and not on the local computer.

Links in Erlang are provided to control error propagation paths for errors between processes.

It was about this time that we realized very clearly that shared data structures in a distributed system have terrible properties in the presence of failures. If a data structure is shared by two physical nodes and if one node fails, then failure recovery is often im-possible. The reason why Erlang shares no data structures and uses pure copying message passing is to sidestep all the nasty problems of ï¬guring out what to replicate and how to cope with failures in a distributed system.

In our world, we were worried by software failures where replication does not help.

Design criteria

Here are some quotes related the design criteria for Erlang.

Changing code on the fly was an initial key requirement

the notion that three properties of a programming language were central to the efï¬cient operation of a concurrent language or operating system. These were:

1) the time to create a process

2) the time to perform a context switch between two different processes

3) the time to copy a message between two processes

The performance of any highly-concurrent system is dominated by these three times.

One of the earliest design decisions in Erlang was to use a form of buffering selective receive

Pipes were rejected in favor of messages

In the concurrent logic programming languages, concurrency is implicit and extremely ï¬ne-grained. By comparison Erlang has explicit concurrency (via processes) and the processes are coarse-grained.

The ï¬nal strategy we adopted after experimenting with many different strategies was to use per-process stop-and-copy GC. The idea was that if we have many thousands of small processes then the time taken to garbage collect any individual process will be small.

…

Current systems run with tens to hundreds of thousands of processes and it seems that when you have such large numbers of processes, the effects of GC in an individual process are insigniï¬cant.

The BEAM compiler compiled Erlang programs to BEAM instructions.

On functionalness

This next series of quotes will probably make the pure functional language people shake their heads, but i think that it’s important to understand Erlang in contrast with pure functional languages.

Erlang is not a strict side-effect-free functional language but a concurrent language where what happens inside a process is described by a simple functional language.

Behaviors in Erlang can be thought of as parameterizable higher-order parallel processes.

… the status of Erlang as a fully fledged member of the functional family is dubious. Erlang programs are not referentially transparent and there is no system for static type analysis of Erlang programs. Nor is it relational language. Sequential Erlang has a pure functional subset, but nobody can force the programmer to use this subset; indeed, there are often good reasons for not using it.

An Erlang system can be thought of as a communicating network of black boxes.

In the Erlang case, the language inside the black box just happens to be a small and rather easy to use functional language, which is more or less a historical accident caused by the implementation techniques used.

History and Usage

One thing that I was looking for in the paper was more details on how long Erlang had been around (besides before Java), how big the largest programs/systems were, and so forth. Here is what I found.

This history spans a twenty-year period…

(The history starts in 1986)

The largest ever system built in Erlang was the AXD301. At the time of writing, this system has 2.6 millions lines of Erlang code.

…

The AXD301 is written using distributed Erlang. It runs on a cluster using pairs of processors and is scalable up to 16 pairs of processors.

…

In the analysis of the AXD reported in [7], the AXD used 20 supervision trees, 122 client-server models, 36 event loggers and10 ï¬nite-state machines. All of this was programmed by a team of 60 programmers.

…

As regards reliability, the AXD301 has an observed nine-nines reliability [7]—and a four-fold increase in productivity was observed for the development process [31].

The AXD 301 is circa 1998.

Perhaps the most exciting modern development is Erlang for multicore CPUs. In August 2006 the OTP group released Erlang for an SMP.

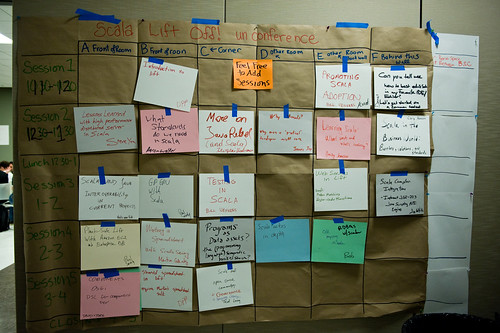

This corroborates something that David Pollak told me at the RedMonk unconference during CommunityOne, namely that SMP support in Erlang had not been there very long. Of course, Erlang was running on systems with 16 physical (pairs, no less) of processings in a distributed environment. So while the runtime might not be that mature on SMP, the overall runtime for concurrency is probably a bit more mature than that. Nonetheless, worthwhile to know the precise facts.

All in all, I found the paper to be a very worthwhile read – (and a nice change from my usual intake of blog posts and tweets). One of my pet peeves about the computer business is the lack of awareness of the history of the field. At least I’ve removed a bit of my own ignorance as relates to Erlang.