Last week I attended the Web 2.0 Summit in San Francisco. The theme this year was “The Data Frame”, an attempt to look at last year’s “Points of Control” theme through the lens of data.

Data Frame Talks

Most of the good data frame material was in the short “High Order Bit” and “Pivot” talks. The interviews with big-company CEOs are generally of little value, because CEOs at large companies have been heavily media trained, and it is rare to get them to say anything really interesting.

Genevieve Bell from Intel posed the question “Who is data and if it were a person what would it be like?” Her answers included:

- Data keeps it real – it will resist being digitized

- Data loves a good relationship – what happens when data is intermediated

- Data has a country (context is important)

- Data is feral (privacy, security, etc.)

- Data has responsibilities

- Data wants to look good

- Data doesn’t last forever (and shouldn’t in some cases)

One Kings Lane was one of the startups described by Kleiner Perkins’ Aileen Lee. The interesting thing about their presentation was their real-time dashboard of purchasing activity during one of their flash sale events. You can see the demo at 6:03 in the video from the session.

Mary Meeker has moved from Morgan Stanley to Kleiner Perkins, but her Internet Trends presentation is still a tour de force of statistics and trends. It’s interesting to watch how her list of trends is changing over time.

Alyssa Henry from Amazon talked about AWS from the perspective of S3, and her talk was mostly statistics and customer experiences. One of her closing sentences stuck in my mind: “What would you do if every developer in your organization had access to a supercomputer?” Hilary Mason has talked about how people sitting at home in their pajamas now have access to big data crunching capability. Alyssa’s remark pushes that idea further, suggesting that access to supercomputing resources is at the same level as access to a personal computer.

TrialPay is a startup in the online payment space. Their interesting twist is that they will provide payment services free of charge, without a transaction fee. They are willing to do this because they collect the data about the payment, and can then use / sell information about payment behaviors and so on (apparently Visa and Mastercard plan to do something similar).

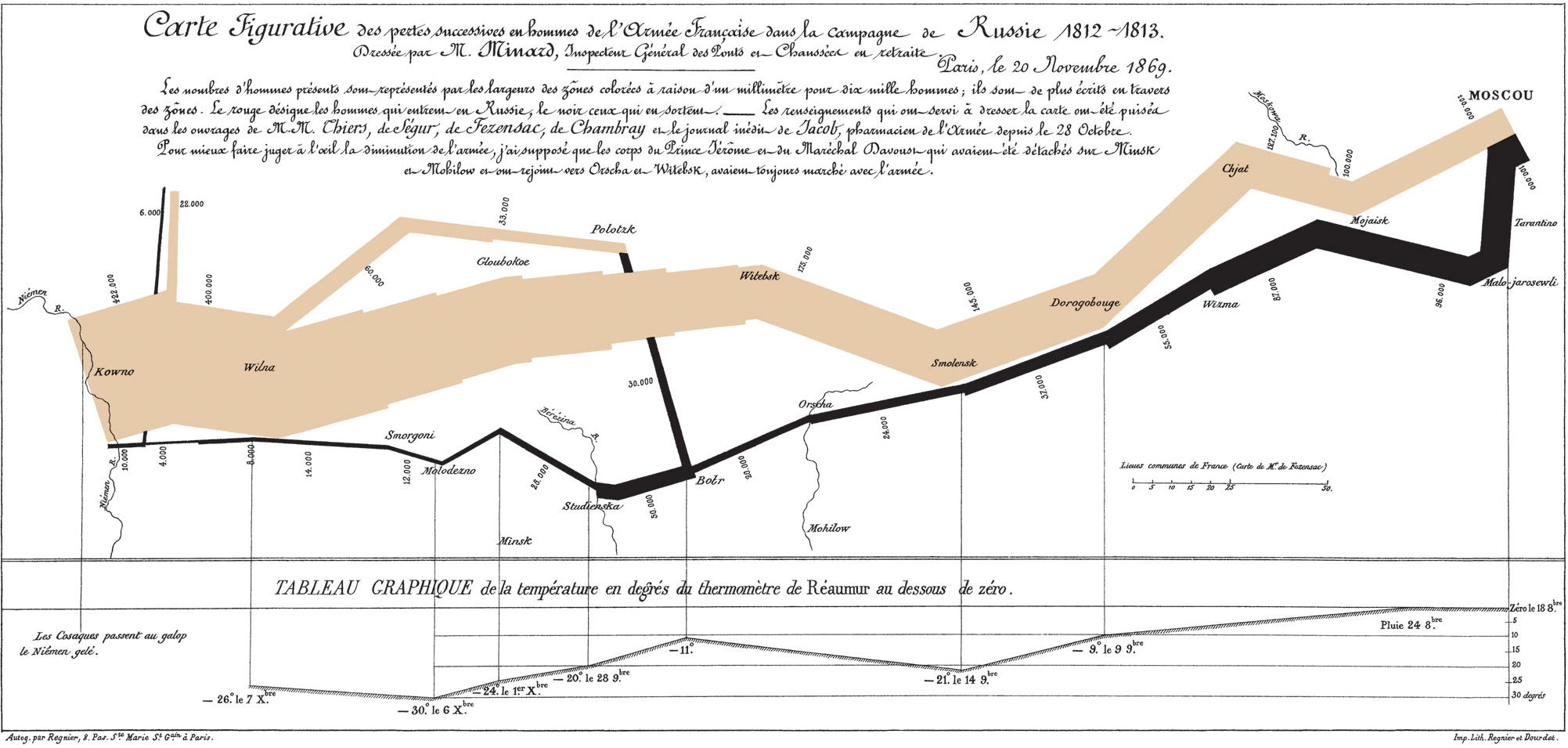

I am not a fan of talks that are product launches or feature launches on existing products, so I was all set to ignore Susan Wojcicki’s talk on Google Analytics. But then I saw this picture in her slides:

Edward Tufte has made this diagram famous, calling it “probably the best statistical graphic ever drawn”. I remember seeing this graphic in one of his seminars and wondering how to bring this type of visualization to a computer. I appreciated the graphic, but I wasn’t sure how many times one would need to graph death marches. The Google Analytics team found a way to apply this visualization to conversion and visitor falloffs. Sure enough, those visualizations are now in my Google Analytics account. Wojcicki also demonstrated that analytics are now being updated in “real time”. Clearly, there’s no need to view instant feedback from analytics as a future item.

Last year there was a panel on education reform. This year, Salman Khan, the creator of Khan Academy, spoke. Philosophically I’m in agreement with what Khan is trying to do – provide a way for every student to attain mastery of a topic before moving on. What was more interesting was that he came with some actual data from a whole-school pilot of Khan Academy materials. Their data shows that it is possible for children assigned to a remedial math class to jump to the same level as students in an advanced math class. They have a very nice set of analytic tools that work with their videos, which should lead to a more data-based discussion of how to help more kids learn what they need to be successful in life.

Anne Wojcicki (yes, she and Susan are sisters) talked about the work they are doing at 23andMe. She gave an example of a rare form of Parkinson’s disease, where they were able to assemble a sizable number of people with the genetic predisposition, and present that group to medical researchers who are working on treatments for Parkinson’s. It was an interesting story of online support groups, gene sequencing, and preventative medicine.

It seems worth pointing out that almost all the talks that I listed in this section were by women.

Inspirational Talks

There were some talks which didn’t fit the data frame theme that well, but I found them interesting or inspirational anyway.

Flipboard CEO Mike McCue made an impassioned plea that we learn when to ignore the data, and build products that have emotion in them. He contrasted the Jaguar XJSS and the Honda Insight as products built with emotion and built on data, respectively. He went on to say that tablets are important because the content becomes the interface. He believes that the future of the web is to be more like print, putting content first, because the content has a soul. Great content is about art, art creates emotion, and emotion defies the data. It was a great, thoughtful talk.

Alison Lewis from Coca-Cola talked about their new, high-tech, internet-connected Freestyle soda machine. A number of futuristic internet scenarios seem to involve soda machines, so it was interesting to hear what actual soda companies are doing in this space. The geek in me thinks that the machine is cool, although I rarely drink soft drinks. I went to the Facebook page for the machine to see what was up, and discovered that the only places in Seattle that had them were places where I would never go to eat.

IBM’s David Barnes talked about IBM’s smart cities initiative, which involves instrumenting the living daylights out of a city: power, water, the transportation grid, everything. His main points were:

- Cities will have healthier immune systems (the health web)

- City buildings will sense and respond like living organisms – water, power, and other systems

- Cars and city buses will run on empty

- Smarter systems will quench cities’ thirst and save energy

- Cities will respond to a crisis – even before receiving an emergency call

He left us with a challenge to “Look at the organism that is the city. What can we do to improve and create a smarter city?” I have questions about how long it would take to actually build a smart city or, worse, retrofit an existing one, but this is a challenge type of long-term project. I’m glad to see that there are companies out there that are still willing to take that big, long view.

Final Thoughts

I really liked the short talk formats that were used this year. They forced many of the speakers to be crisp and interesting, or at least crisp, and I really liked the volume of what got presented. One thing seems true: from the engineering audience of Strata to the executive audience at Web 2.0, data and data-related topics are at the top of everyone’s mind.

And there, in addition to ponies and unicorns, be dragons.