Carl Hewitt, the inventor of the Actor model, has a blog.

Category Archives: programming languages

Thoughts on MagLev – VMs for everybody!

One of the most visible presentations at last week’s RailsConf was Avi Bryant’s demonstration of MagLev, a Ruby VM based on GemStone’s S/64 VM for Smalltalk. It caused a stir for three reasons: MagLev’s micro-benchmark performance looks really good, S/64 has been in production for a while, and it appears to have some really interesting features (an OODB, shared VMs, etc.). MagLev is a reminder that the world of production-quality, high-performance virtual machines is bigger than many of us sometimes remember.

I believe that over the next few years we will see a flourishing of virtual machines, as well as of languages atop existing virtual machines. Take for example Reia, a Ruby/Pythonesque experiment atop Erlang’s BEAM VM. As we return to a multi-language world, we will also necessarily return to a multiple-implementation world. Before Java, there were many languages and many implementations of those languages. You could argue that there were too many, and I think that’s probably true. Even so, I would argue that we need to enter a new period of language and runtime experimentation. A big driver, but not the only driver, for this is the approaching multi-core world. When you don’t know how to solve something, more attempts at a solution are better.

OS X Scripting

John Gruber followed up on Daniel Jalkut’s suggestion that Apple replace AppleScript with Javascript:

I agree with this wholeheartedly. Or maybe even make a clean break and scrap OSA and introduce a new system.

I’ve been talking up the benefits of scripting apps on the Mac since the 1990s. The sad fact is that Apple has never really supported scripting to the level that it deserves, and scripting is even more important now, in the days of a UNIX-based Mac OS. I have a bunch of scripts that I rely on daily to help me get things done more efficiently. I’d write more of them, but two things hold me back. First, AppleScript is really a funky language. I’ve partially solved that by switching to Python (via appscript) for the scripting, but that’s only half the problem. The other half is that the API exposed via OSA is also pretty funky. If Apple cleaned all that up in, say, 10.6, I’d be happy to rework my existing body of scripts.

Even if that happened, the big problem is that developers don’t really support scripting that well, so a good scripting system overhaul needs to make it easy for developers to expose application functionality to scripts. Unless that happens, improvements in the scripting language and in OSA’s APIs will not be enough to push scripting to the level where it belongs.

Notes on A History of Erlang

Joe Armstrong wrote a paper for last year’s HOPL-III conference on the history of Erlang. For some reason, I didn’t get a paper copy of those proceedings, and was too busy to notice their absence. Fortunately, Lambda the Ultimate picked it up and supplied links to the paper and the accompanying presentation. Digging into the history of something like Erlang is always fascinating, and Armstrong has done a good job of explaining how Erlang came to be.

Here are a bunch of quotes on topics that I found interesting. I’ve grouped them into categories, but searching the PDF of the paper shouldn’t be hard if you want to know where they originated.

Sources of inspiration

Those familiar with Prolog will not find it at all surprising that Erlang has its roots in Prolog (mostly for implementation reasons). What I did find interesting was the origin, history, and viewpoint of the concurrency model:

The explanations of what Erlang was have changed with time:

1. 1986 – Erlang is a declarative language with added concurrency.

2. 1995 – Erlang is a functional language with added concurrency.

3. 2005 – Erlang is a concurrent language consisting of communicating components where the components are written in a functional language.

…

Today we emphasize the concurrency.

Note that the word actor never appears in those descriptions. Indeed, the word actor does not appear in the paper at all. So for all the discussion about Erlang’s usage of the actor model, it appears that the Erlang folks independently duplicated many of the ideas of Hewitt’s Actors. I think that is kind of interesting.

Lisp and Smalltalk are cited as inspirations, but more for the implementation of the runtime than for any features in the language. I came away from the paper with the impression that Armstrong and his colleagues are not paradigm ideologues. They are trying to get the job done.

Reliability

There is a huge emphasis on reliability throughout the paper, supporting Steve Vinoski’s remarks about Erlang. I’ll just include a series of quotes, which you can interpret as you see fit:

Erlang was designed for writing concurrent programs that “run forever”

At an early stage we rejected any ideas of sharing resources between processes because of the difficulty of error handling. In many circumstances, error recovery is impossible if part of the data needed to perform the error recovery is located on a remote machine and if that remote machine has crashed.

In order to make systems reliable, we have to accept the extra cost of copying data structures between processes and always make sure that processes have enough data to continue by themselves if other processes crash
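To make the copying argument concrete, here is a minimal Python sketch, assuming threads with queues can stand in for Erlang processes and mailboxes. The `Process` class and its `send` method are hypothetical helpers, not Erlang or library APIs; the only point is that `send` deep-copies the message, so whatever the sender does to its own data afterwards cannot reach the receiver.

```python
import copy
import queue
import threading


class Process:
    """Toy process: a thread with a private mailbox (hypothetical helper)."""

    def __init__(self):
        self.mailbox = queue.Queue()
        self.received = []  # only this process's own thread appends here
        self.thread = threading.Thread(target=self._run)
        self.thread.start()

    def _run(self):
        while True:
            msg = self.mailbox.get()
            if msg is None:  # shutdown sentinel
                return
            self.received.append(msg)

    def send(self, msg):
        # Copy at the process boundary: the receiver gets its own data, so a
        # later mutation (or crash) on the sender side cannot corrupt it.
        self.mailbox.put(copy.deepcopy(msg))

    def stop(self):
        self.mailbox.put(None)
        self.thread.join()


state = {"calls": [1, 2]}
p = Process()
p.send(state)
state["calls"].append(3)  # the sender keeps mutating its own copy
p.stop()
print(p.received)
```

The price is the extra copy on every send, which is exactly the cost the quote says Erlang chose to accept.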

The key observation here is to note that the error-handling mechanisms were designed for building fault-tolerant systems, and not merely for protecting from program exceptions. You cannot build a fault-tolerant system if you only have one computer. The minimal configuration for a fault-tolerant system has two computers. These must be configured so that both observe each other. If one of the computers crashes, then the other computer must take over whatever the first computer was doing.

This means that the model for error handling is based on the idea of two computers that observe each other. Error detection and recovery is performed on the remote computer and not on the local computer.

Links in Erlang are provided to control error propagation paths for errors between processes.
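A rough Python sketch of the link idea, assuming threads as stand-ins for processes (and certainly not for two separate computers): when a worker dies, its exit reason is delivered as a message to the process it is linked to, and that observer decides whether to restart it. `spawn_link` and `supervise` are hypothetical names loosely modeled on Erlang’s vocabulary, not its real API.

```python
import queue
import threading


def spawn_link(fn, supervisor_mailbox, name):
    """Run fn in a thread; when it finishes or dies, notify the linked observer."""
    def wrapper():
        try:
            fn()
            reason = "normal"
        except Exception as exc:  # the worker makes no attempt to recover itself
            reason = repr(exc)
        supervisor_mailbox.put(("EXIT", name, reason))

    t = threading.Thread(target=wrapper)
    t.start()
    return t


def supervise(make_worker, max_restarts):
    """Restart a crashing worker; detection and recovery happen in the observer."""
    mailbox = queue.Queue()
    log = []
    restarts = 0
    spawn_link(make_worker(restarts), mailbox, "worker")
    while True:
        _, name, reason = mailbox.get()  # exit signal arrives as a message
        log.append((name, reason))
        if reason == "normal" or restarts >= max_restarts:
            return log
        restarts += 1
        spawn_link(make_worker(restarts), mailbox, name)


def flaky(attempt):
    # Hypothetical worker that crashes on its first two attempts.
    def run():
        if attempt < 2:
            raise RuntimeError(f"crash {attempt}")
    return run


log = supervise(flaky, max_restarts=5)
print(log)
```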

It was about this time that we realized very clearly that shared data structures in a distributed system have terrible properties in the presence of failures. If a data structure is shared by two physical nodes and if one node fails, then failure recovery is often impossible. The reason why Erlang shares no data structures and uses pure copying message passing is to sidestep all the nasty problems of figuring out what to replicate and how to cope with failures in a distributed system.

In our world, we were worried by software failures where replication does not help.

Design criteria

Here are some quotes related to the design criteria for Erlang.

Changing code on the fly was an initial key requirement

the notion that three properties of a programming language were central to the efficient operation of a concurrent language or operating system. These were:

1) the time to create a process

2) the time to perform a context switch between two different processes

3) the time to copy a message between two processes

The performance of any highly-concurrent system is dominated by these three times.
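One way to get a feel for these three costs in a mainstream runtime is a crude micro-benchmark. The sketch below is an assumption-laden illustration in Python: it measures OS-thread creation, a ping-pong through two `queue.Queue`s (which bundles the context switches together with the message hand-off), and `copy.deepcopy` of a message. It says nothing about BEAM’s own numbers, and absolute results will vary by machine and interpreter.

```python
import copy
import queue
import threading
import time


def time_spawn(n=200):
    """Average seconds to create, start and join a do-nothing thread."""
    start = time.perf_counter()
    for _ in range(n):
        t = threading.Thread(target=lambda: None)
        t.start()
        t.join()
    return (time.perf_counter() - start) / n


def time_round_trip(n=2000):
    """Average seconds per ping-pong between two threads via queues.

    Each round trip needs control to pass to the responder and back, so it
    includes two message hand-offs plus the switches between threads.
    """
    ping, pong = queue.Queue(), queue.Queue()

    def responder():
        for _ in range(n):
            pong.put(ping.get())

    t = threading.Thread(target=responder)
    t.start()
    start = time.perf_counter()
    for i in range(n):
        ping.put(i)
        pong.get()
    elapsed = time.perf_counter() - start
    t.join()
    return elapsed / n


def time_copy(msg, n=2000):
    """Average seconds to deep-copy one message (the copy in copy-on-send)."""
    start = time.perf_counter()
    for _ in range(n):
        copy.deepcopy(msg)
    return (time.perf_counter() - start) / n


print("spawn:", time_spawn())
print("round trip:", time_round_trip())
print("copy:", time_copy({"op": "get", "key": "x" * 100}))
```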

One of the earliest design decisions in Erlang was to use a form of buffering selective receive
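Selective receive means a process can pull out the first message that matches a pattern, while messages that don’t match stay queued, in arrival order, for later. A minimal Python sketch follows; the `Mailbox` class is hypothetical, uses a predicate in place of Erlang’s pattern matching, and returns `None` where a real `receive` would block or hit an `after` timeout.

```python
from collections import deque


class Mailbox:
    """Sketch of buffering selective receive (hypothetical class)."""

    def __init__(self):
        self._messages = deque()

    def deliver(self, msg):
        self._messages.append(msg)

    def receive(self, matches):
        """Remove and return the oldest message for which matches(msg) is true.

        Non-matching messages stay buffered, in order, for a later receive.
        """
        for i, msg in enumerate(self._messages):
            if matches(msg):
                del self._messages[i]  # safe: we return immediately
                return msg
        return None

    def pending(self):
        return list(self._messages)


mb = Mailbox()
mb.deliver(("log", "started"))
mb.deliver(("reply", 42))
mb.deliver(("log", "working"))

reply = mb.receive(lambda m: m[0] == "reply")  # skips over the first log message
print(reply)         # ('reply', 42)
print(mb.pending())  # [('log', 'started'), ('log', 'working')]
```

The buffering is what lets a process wait for one specific reply without losing, or having to re-send, the unrelated messages that arrived first.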

Pipes were rejected in favor of messages

In the concurrent logic programming languages, concurrency is implicit and extremely fine-grained. By comparison Erlang has explicit concurrency (via processes) and the processes are coarse-grained.

The final strategy we adopted after experimenting with many different strategies was to use per-process stop-and-copy GC. The idea was that if we have many thousands of small processes then the time taken to garbage collect any individual process will be small.

…

Current systems run with tens to hundreds of thousands of processes and it seems that when you have such large numbers of processes, the effects of GC in an individual process are insignificant.
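For readers who haven’t seen a copying collector, here is a toy Cheney-style stop-and-copy pass over a single process’s heap, in Python. It assumes a made-up heap representation (a list of objects whose fields are either plain values or `Ref` indices into the same heap) and leaves out the refinements a production collector would add. Because messages are copied, no other process can point into this heap, which is what lets each process be collected on its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ref:
    """Pointer to an object (by index) in one process's private heap."""
    index: int


def stop_and_copy(heap, roots):
    """Cheney-style copying collection of one process's heap.

    heap:  list of objects; each object is a list of fields, where a field
           is either an immediate value or a Ref into the same heap.
    roots: list of Refs (e.g. the process's stack).
    Returns (new_heap, new_roots); unreachable objects are simply not copied.
    """
    to_space = []
    forward = {}  # old index -> new index (forwarding pointers)

    def evacuate(ref):
        if ref.index not in forward:
            forward[ref.index] = len(to_space)
            to_space.append(list(heap[ref.index]))  # copy the object's fields
        return Ref(forward[ref.index])

    new_roots = [evacuate(r) for r in roots]
    scan = 0
    while scan < len(to_space):  # Cheney scan: fix up fields of copied objects
        obj = to_space[scan]
        for i, field in enumerate(obj):
            if isinstance(field, Ref):
                obj[i] = evacuate(field)
        scan += 1
    return to_space, new_roots


# Object 0 -> object 2 -> object 0 (a cycle); object 1 is garbage.
heap = [[1, Ref(2)], ["garbage"], [2, Ref(0)]]
new_heap, new_roots = stop_and_copy(heap, [Ref(0)])
print(new_heap)   # [[1, Ref(index=1)], [2, Ref(index=0)]]
print(new_roots)  # [Ref(index=0)]
```

The work done is proportional to the live data in that one heap, which is why many small heaps keep individual pauses short.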

The BEAM compiler compiled Erlang programs to BEAM instructions.

On functionalness

This next series of quotes will probably make the pure functional language people shake their heads, but I think that it’s important to understand Erlang in contrast with pure functional languages.

Erlang is not a strict side-effect-free functional language but a concurrent language where what happens inside a process is described by a simple functional language.

Behaviors in Erlang can be thought of as parameterizable higher-order parallel processes.
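To make “parameterizable higher-order process” concrete, here is a small Python sketch in the spirit of a generic server behavior, assuming a thread and a queue stand in for an Erlang process and mailbox. `start_server` is hypothetical, not OTP’s `gen_server` API: the generic part owns receiving, replying and carrying state, while the application-specific part is an ordinary function from `(request, state)` to `(reply, new_state)`.

```python
import queue
import threading


def start_server(handle_call, initial_state):
    """Spawn a generic server 'process' parameterized by handle_call.

    Returns a call(request) function that clients use to talk to it.
    """
    mailbox = queue.Queue()

    def loop():
        state = initial_state
        while True:
            request, reply_to = mailbox.get()
            if request == "stop":
                reply_to.put(state)  # hand back the final state and exit
                return
            reply, state = handle_call(request, state)
            reply_to.put(reply)

    threading.Thread(target=loop, daemon=True).start()

    def call(request):
        reply_to = queue.Queue(maxsize=1)
        mailbox.put((request, reply_to))
        return reply_to.get()

    return call


# Application-specific part: a counter written as a side-effect-free function.
def counter(request, count):
    if request == "incr":
        return count + 1, count + 1
    if request == "get":
        return count, count
    return ("unknown", request), count


call = start_server(counter, 0)
call("incr")
call("incr")
print(call("get"))  # 2
```

Passing a different function to `start_server` yields a different server with the same plumbing, which is the sense in which the behavior is parameterized.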

… the status of Erlang as a fully fledged member of the functional family is dubious. Erlang programs are not referentially transparent and there is no system for static type analysis of Erlang programs. Nor is it a relational language. Sequential Erlang has a pure functional subset, but nobody can force the programmer to use this subset; indeed, there are often good reasons for not using it.

An Erlang system can be thought of as a communicating network of black boxes.

In the Erlang case, the language inside the black box just happens to be a small and rather easy to use functional language, which is more or less a historical accident caused by the implementation techniques used.

History and Usage

One thing that I was looking for in the paper was more detail on how long Erlang had been around (beyond the fact that it predates Java), how big the largest programs/systems were, and so forth. Here is what I found.

This history spans a twenty-year period…

(The history starts in 1986)

The largest ever system built in Erlang was the AXD301. At the time of writing, this system has 2.6 million lines of Erlang code.

…

The AXD301 is written using distributed Erlang. It runs on a cluster using pairs of processors and is scalable up to 16 pairs of processors.

…

In the analysis of the AXD reported in [7], the AXD used 20 supervision trees, 122 client-server models, 36 event loggers and 10 finite-state machines. All of this was programmed by a team of 60 programmers.

…

As regards reliability, the AXD301 has an observed nine-nines reliability [7]—and a four-fold increase in productivity was observed for the development process [31].
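For a sense of scale, reading “nine nines” as availability means 99.9999999% uptime, i.e. an unavailability of 10^-9. A few lines of Python turn that into downtime per year, assuming a 365.25-day year; nine nines works out to roughly 32 milliseconds of downtime a year.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # 31,557,600 seconds


def downtime_per_year(nines):
    """Allowed downtime in seconds per year for an availability of `nines` nines."""
    unavailability = 10 ** -nines  # e.g. 9 nines -> 99.9999999% up
    return SECONDS_PER_YEAR * unavailability


for n in (3, 5, 9):
    print(f"{n} nines: {downtime_per_year(n):.4g} s/year")
```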

The AXD 301 is circa 1998.

Perhaps the most exciting modern development is Erlang for multicore CPUs. In August 2006 the OTP group released Erlang for an SMP.

This corroborates something that David Pollak told me at the RedMonk unconference during CommunityOne, namely that SMP support in Erlang had not been there very long. Of course, Erlang was already running on clusters of up to 16 pairs (pairs, no less) of physical processors in a distributed environment. So while the SMP runtime might not be that mature, the overall runtime support for concurrency is probably a bit more mature than that. Nonetheless, it’s worthwhile to know the precise facts.

All in all, I found the paper to be a very worthwhile read (and a nice change from my usual intake of blog posts and tweets). One of my pet peeves about the computer business is the lack of awareness of the history of the field. At least I’ve removed a bit of my own ignorance as it relates to Erlang.

The Scala vs Erlang whirlwind

Over the last week or two there’s been a bit of commotion, with various parties in the blogosphere making the case for Scala against Erlang or for Erlang against Scala. Here’s a see-Spot-run summary of the main writers and their positions and content:

Ted Neward (1, 2) – Ted (how confusing) is in the Scala camp, and thinks that Scala’s library-based approach to actors is preferable to Erlang’s VM (BEAM). He cites manageability as a major concern. He also thinks that adapting a process-style model into the JVM would be easier than adding SNMP monitoring to BEAM. The length of the Barcelona project bibliography suggests otherwise, but we’ll never know unless some brave soul goes and tries to do this. Fortunately, the JDK is open source now. One has to wonder whether such a change could make its way through the JCP, though. Unfortunately for Ted, I found that many of his arguments were weakened by his lack of knowledge about Erlang.

Steve Vinoski (1, 2, 3) – Steve’s articles are more about the reliability aspects of Erlang, and he’s mostly trying to correct Ted’s facts on Erlang. He thinks that Erlang has proven its reliability chops by running for years non-stop. Given the frequency with which Java app servers need to be (or are) bounced, this doesn’t seem that incredible to me.

Patrick Logan (1, 2, 3) – Patrick piled on after Steve and has spent most of his writing trying to correct/challenge Ted’s assertions about Erlang. Patrick thinks that the conventional (i.e. JVM and CLR) runtimes will have problems implementing an Erlang style shared-nothing model, since the pre-existing libraries for those runtimes are not engineered in a shared-nothing manner.

Barry Kelly was an observer of the Neward-Vinoski-Logan discussion, and added some commentary on the impact of VM primitives on things like lift. This point resonates with me, because it seems to me that both languages and language runtimes will need some work to meet the challenges of large-scale multicore computing.

Yariv Sadan has done a pile of stuff in Erlang, and supplied his own summary of the differences between Scala and Erlang. There is a very informative exchange between Yariv and lift author David Pollak in the comments of this one.

That’s the short rundown. This is a very interesting problem space. Before I turned into a database programming language guy in graduate school, I was angling to be a concurrent programming language guy. Along the way I got pretty good doses of functional and logic programming, as well as actor programming. That work was in the context of people planning to build highly concurrent computers (for the day), on the order of thousands of processors. Today, multicore hardware is not quite at that level, but it is approaching it pretty quickly. If there is any force in computing that is likely to precipitate the need for a new programming ecosystem (language, runtime, libraries), I think concurrent programming is it. I also think there is just not enough experience with this problem to have a real sense of what is going to work. Cliff Click and Brian Goetz were right when they said that we just don’t have a good programming model for this stuff. Absent a model, I don’t know how we can think that we really understand what the runtime needs to deliver.

Jython Users at EuroPython

I’ve been asked to moderate a Jython panel at EuroPython this year, and we are looking for Jython users to be represented on the panel. So if you are a Jython user and you are going to be at EuroPython, please drop me a note or leave a comment.

Scala liftoff

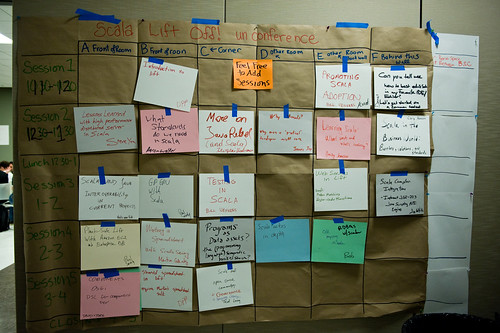

I stayed around in San Francisco for one more day after JavaOne, in order to attend the Scala liftoff. The liftoff was an open space style conference (which has a more specific meaning than “unconference”, at least to me). My friend Kaliya Hamlin did a great job of facilitating the day.

Scala has steadily been gaining attention, and hasn’t yet hit (at least in my eyes) the hype part of the classic Gartner hype cycle. I’ve been poking about with Scala, mostly because of the type inferencing, the Actor library, and lift. I have great respect for the work that Martin Odersky has done over the years, which also has me interested. Couple that with what I learned about closures in Java at JavaOne, and the list of reasons to look more deeply at Scala is getting long, especially if you are determined to use a statically typed language.

I wasn’t able to make it to any of the sessions on lift. It just worked out that other sessions overlapped them in a pathological way. While this is unfortunate, I am sure that I’ll be able to pick up anything that I need from the mailing lists and other documentation. I was able to attend two sessions on actors. One of the sessions had people with questions about actors, but no Scala actor experts were in that group. There was some discussion of the Pi-calculus and the join calculus, but no discussion of the actual actor theory.

Steve Yen’s session on actor-d was pretty useful. Steve set out to build a version of memcached using Scala’s actors. He spent most of his slot talking about Scala/Java-isms that he ran into, which mattered because he was comparing against the C memcached. By the time he got to the actor-related material, he was almost out of time. Steve found that he had to remove actors from the main loop of his server in order to get sufficient performance. He wanted to gather statistics from the server in the background, and discovered that the main loop actor was always processing messages and was never idle long enough to report statistics. He ended up replacing the actor with plain old Java Threads (POJT?). On top of that, he ran into many of the standard Java problems as well. I’m not sure what to conclude from this. I don’t recall what kind of hardware he was on, and I am not convinced that he had the right architecture for an actor-based system. Some of his experience also seemed contrary to what the lift folks have been claiming. I think that we are in for a decent amount of investigation here.

One of Martin’s statements about Scala is that it is possible (and better) to extend the language via libraries than via actual language constructs. For the most part, I agree with this, but there are certain extensions, like concurrency, which interact with the runtime. In those cases, I don’t see how the library approach allows taking advantage of runtime features. The current version of Scala actors is implemented as a library.
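One plausible shape for the workaround, offered only as a hypothetical sketch since the details of Steve’s code weren’t covered: instead of sending a “report statistics” message to a busy main-loop actor, keep the counters in lock-protected shared state that a separate reporter thread reads on its own schedule. This trades actor-style isolation for a shared lock, and the `Stats`, `busy_worker` and `reporter` names are made up for illustration.

```python
import threading
import time


class Stats:
    """Counters shared between a busy worker thread and a reporter thread."""

    def __init__(self):
        self._lock = threading.Lock()
        self._gets = 0

    def record_get(self):
        with self._lock:
            self._gets += 1

    def snapshot(self):
        with self._lock:
            return self._gets


def busy_worker(stats, requests):
    # Stands in for the server's main loop: it never goes idle while work remains.
    for _ in range(requests):
        stats.record_get()


def reporter(stats, samples, interval, out):
    # Reads the counters directly instead of queueing a request to the worker.
    for _ in range(samples):
        out.append(stats.snapshot())
        time.sleep(interval)


stats = Stats()
seen = []
w = threading.Thread(target=busy_worker, args=(stats, 200_000))
r = threading.Thread(target=reporter, args=(stats, 5, 0.001, seen))
w.start()
r.start()
w.join()
r.join()
print(seen, stats.snapshot())
```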

One of the things that I am currently working on is support for Python in NetBeans, so I dropped into the session on IDE support for Scala. With the exception of IntelliJ, none of the IDE plugin principals were present, so it was hard to have a really productive discussion. Martin did attend the session and we talked about the possibility of getting hooks into the existing Scala compiler, particularly the parser and the type inferencer. That could yield some big dividends for people working on IDE support. One IDE feature that I would like to see is the ability to hit a key, and have the IDE “light up” all the inferred types, overlaid on the existing program code. This would allow developers to see if their intuition about the types actually matched that of the type inferencer. I’d like a feature like this for Python/Ruby/Groovy/JavaScript code as well. Further discussion was deferred to the scala-tools mailing list.

The other session that I participated in was the session on Scala community and governance. Several people wondered about this during Kaliya’s “What questions do you have about Scala” portion of the schedule building. When nobody else put up a session in this area, I grabbed a slot, hoping to spur some conversation, if for no other reason than my own education. Fortunately, Martin had already been thinking about the problem. He is going to adopt a Python-style governance, with him (and EPFL) having the final say on language design matters. There will be Scala Enhancement Proposals (SEPs), like the Python PEPs. I’m very happy with this. I think that Python has done very well at balancing (lots of) community input on the language design against retaining that “quality without a name”. One of the things that I said during the CommunityOne general session panel was that particular individuals in the right place, at the right time, matter a great deal. After watching Martin for the day, and seeing his interactions on the mailing list over the last few months, I think that the design of Scala is in very good hands.

We also talked about the evolution of the Scala libraries. The Scalax project is working to build a set of utility libraries for Scala. Martin views scalax as a place where anyone can submit a library and have it tested, vetted, reworked, etc. Eventually, some libraries in scalax would become candidates for addition to the Scala standard libraries. This also seems like a sane approach to me. I like the idea of having a place for libraries to shake out before going into the standard libraries. Martin also mentioned a LINQ in Scala project. I need to track that one down too.

It is good to be in a multi-language world again. There’s room for Scala, Python, Ruby, and others. Another language that I am keeping my eye on is Newspeak.

JavaOne 2008: Part 2

I’ve been to so many conferences and seen so many talks that it’s hard for me to really get excited about conference presentations. I went to talks here and there, but nothing at JavaOne was really reaching out and grabbing me (in fairness, this happens at other conferences also, so it’s not just JavaOne). Or at least that was true until the last day.

Friday opened with a keynote by James Gosling, who served as the MC for a train of presenters on various cool projects.

Cool stuff

First up was Tor Norbye, who has done a lot of good work on support for editing different languages in NetBeans. Tor has been working on JavaScript support for NetBeans 6.1, and he showed off some cool features, like detecting all the exits from a function, semantic highlighting of variables, and integrated debugging between NetBeans and Firefox. All of which was cool. When I was managing the Cosmo group at OSAF, I tried a bunch of JavaScript IDEs and never really liked any of them. I haven’t done a lot with NetBeans 6.1 yet, but I will. Tor showed one feature that was the killer one for me: NetBeans knows what JavaScript will work in which browser. You can configure the IDE for the browsers that you want to support, and this affects code completion, quick fix checking, and so on. Definitely useful. Here are several more references on the JavaScript support in NetBeans 6.1.

The Java Platform

It’s easy for me (and others, I’d bet) to think mostly of JavaEE or perhaps JavaME when thinking about Java. That’s understandable given the world’s fixation on web applications, and looking ahead to mobile. But the majority of the talks in Gosling’s keynote session had nothing to do with Java SE, EE, or ME (at least in the phone sense).

Probably the hit (applause-meter-wise) of the keynote was Livescribe’s demonstration of their Pulse Smart Pen. This is an interesting pen that records the ink strokes that it makes, along with any ambient audio while the writing is happening. The ink and audio can be uploaded to a computer, as long as that computer runs Windows (apparently a Mac version is in the works). Unfortunately, the pen works by sensing marks on special paper (that would be the razor blades), so there’s a limit on how useful this can be. The presenter said that a future version of the software would allow people to print their own special paper, but that’s still a future item for now. By reading special marks on the special paper, you get a pretty cool user interface. The pen itself can run Java programs, and there is a developer kit available for it. If they can get past the limitation of special paper, I think that this is going to be pretty interesting.

Sentilla showed off their Mote hardware, which is something like RFID chips that can run Java programs, except that these chips can form mesh networks amongst themselves and can have various kinds of sensors attached. There are lots of applications for these things, going well beyond inventory tracking and such.

Sun Distinguished Engineer Greg Bollella demonstrated Blue Wonder, which is a replacement for the computers used to control factories. Blue Wonder combines off the shelf x86 hardware, Solaris, and real time Java to provide a commodity solution for factory control applications. This is far afield of Web 2.0 applications, but just as cool, in my mind.

By the end of the keynote I was reminded of the long reach of the JVM platform, something that I’d lost sight of. The latest craze in the Web 2.0 space is location data; O’Reilly has an entire conference devoted to the topic. I think that sensor fusion of various kinds (not just location sensors) is going to play a big role in the next generation of really interesting applications. The JVM looks like it’s going to be a part of that. I don’t think that any other virtual machine technology is close in this regard.

Java’s future

I also went to a talk on Maxine, a meta-circular JVM. Judging by the Twitter reactions of the JRuby and Jython committers, I’d say that Maxine is going to get some well-deserved attention when it is open sourced in June. I’m particularly interested because the PIs for Maxine worked on PJava and MVM. Given the differences between the Erlang VM and the JVM, I think that the ability to experiment with MVM is going to be pretty interesting. Apparently, there’s already some form of MVM support in Maxine; we’ll find out for sure in June.

During the conference I had a meeting with Cay Horstmann, and at the end of the meeting Josh Bloch saw Cay and wanted to talk to him about the BGGA closures proposal for Java. Turns out that Josh has an entire slide deck which consists of a stream of examples where BGGA does the wrong thing, generates really cryptic error messages, or requires an unbelievable amount of code. The fact that BGGA depends on generics, which are already really hard, doesn’t give me much confidence about closures in Java. If you are a statically typed language fan, I think that you ought to be worried about whether Java, the language, has any headroom left.

The last session that I went to was Cliff Click and Brian Goetz’s session on concurrency. Unsurprisingly, the summary of the talk is “abandon all hope, ye who enter here”. I was glad to see a section in the talk about hardware support and changes for concurrency. The problem is that concurrency is going to introduce end-to-end problems, from the hardware all the way up to the application level, and I think that every stop along the way is going to be affected. Unlike sequential programming, where we are still largely reinventing the wheels of the past, there is no real history of research results to be mined for concurrency. HotSpot and other VMs are close to implementing most of the tricks learned from Smalltalk and Lisp, but those systems were mostly used in a sequential fashion, and while there were experiments with concurrency, there was much less experience with concurrent systems than with sequential ones. Big challenges ahead.

JavaOne 2008: Part 1

JavaOne is a pretty intense experience, simply by virtue of its size. If CommunityOne was twice the size of OSCON, then JavaOne is three times the size of OSCON, and it shows. There was an immediate change in feel and atmosphere once JavaOne got into full swing. You could barely move sometimes, and there were a bunch of people whose job was to corral the crowds into some semblance of order.

As a Sun employee, I was on a restricted badge, which made it hard to get into sessions (you are basically flying standby). On the other hand, I had plenty to do. I participated in a dynamic languages panel for press and analysts (who have their own track), which was pretty fun. The discussion was lively enough that we could have gone for another hour. There was one persistent fellow who really wanted there to be just one language, or wanted us to declare language X better for task Y. When I got started in computing, people learned and worked in several languages. It’s only been recently that a language (Java) was popular enough that people could just learn one language, and the growth of web applications pretty much guarantees a multi-language future because of server-side and client-side differences. In the end, we’re back to finding and using the best tool for the job, or at least the most comfortable tool for the job. This is probably going to cause heartburn for big IT shops, but developers seem to be happy about it.

I took a walk through the Java Pavilion with Tim Bray one afternoon. He got into the AMD booth’s aromatherapy display (and yes, he has a similar shot of me doing the same thing). One of the highlights of that excursion was Tim introducing me to Dan Ingalls, who made a number of very substantial contributions to Smalltalk, including its original VM and the BitBlt graphics operation. I am a great admirer of the work that was done in Smalltalk, and it was an honor to meet Dan and have him explain the Lively Kernel to me. A short (and probably not quite fair) description of the Lively Kernel is to take the lessons learned from Smalltalk/Squeak and implement them in the browser using Javascript, AJAX, and SVG.

Unsurprisingly, I got the most value at JavaOne from the networking. And that means dinners, hallway conversations, and yes, the parties. Usually when I go to conferences, I am just a party attender. This time, I also worked at some of the parties. It was a little different to walk around the SDN party wearing a t-shirt with “SDN Event Staff” painted large on the back. I still had a good time. Between the T-shirt and the camera, I definitely had some good conversations.

Another benefit of being at a huge company is that they can really throw a big party, like hiring Smash Mouth to play a private concert.

I’ve uploaded the rest of my photos from the conference to this Flickr set.

I actually do have some technical commentary, but I am going to put that into another post.

Erlang == CGI?

Jay Nelson, in the comments to Damien Katz’s Lisp as Blub:

The two relevant issues are system granularity and garbage collector behavior (if it is related to memory and garbage collection).

Erlang encourages an architecture of many small-granularity processes. To the extent that this approach is followed, failures are localized. It is possible to do this with other languages, but erlang does encourage the approach more so than other languages.

The other difference is that erlang uses a single-threaded garbage collector per process. This makes the garbage collection process simpler, more finely grained and distributed. Smaller processes mean less complicated memory structures, and thus the language encourages a simpler model with localized garbage collection failure. Determining the cause of overburdened memory usage (or any other resource because of the localized nature of small processes) becomes easier.

An erlang system can get wedged, but following the principle of many small processes makes it less likely to happen than in other languages which encourage large processes with shared memory structures.

It strikes me that this is a sort of CGI’ish view of the world (well except for the garbage collector). CGI scripts run, use (non-shared) resources, release them all and die. The entire post and comment thread is worth some pondering.