I probably should have chosen a different title for this post, because at the rate things are going for PyCon, I’ll just have to use the same title again for the next few years. This year, PyCon happened during the same week as ApacheCon EU (the 10th anniversary of the ASF), and EclipseCon. I have a slight bit of regret that I wasn’t at ApacheCon for the 10 year anniversary, but I’m planning to be at the 10th anniversary celebration at ApacheCon US in Oakland, in November. That roughly corresponds to the time when first got involved with Apache and open source, so it will be pretty meaningful. Beyond that, it was hands down for PyCon, my favorite conference. Even if the PyCon organizers hadn’t invited me to speak on a topic of my choosing, there are just so many things to love about PyCon.

The Talks

Despite a very active and fun hallway track, I did go to a number of talks.

I went to Adam Christian and Mikeal Rogers‘ talk on Windmill mostly for moral support. We worked together at OSAF, and I like Windmill, and it’s really good to see Windmill picking up steam in the Python and other communities. If you are looking for a web testing framework, particularly one that is string at AJAX applications, you owe it to yourself to look at Windmill.

There were a few tools talks that I attended. I use IPython, so I was curious to see how Reinteract: a better way to interact with Python, would improve on IPython. I like the Visicalc/TkSolver like worksheet that allows you to change values in a Python interpreter history and have values propage forward. I’d love to see all these REPL tools come together in an integrated way. We might finally get back to the functionality of the Lisp Machine REPLs someday. I also attended How AlterWay releases web applications using “zc.buildout“ since Jacob Kaplan-Moss warned me that the zc.buildout documentation was sorely lacking. Even that talk wasn’t enough to get me going, but the sprints produced some great new documentation for buildout. I’m looking forward to digging into that.

Some talks dealt directly with topics that are relevant to work, particularly now that the dynamic languages folks at Sun are now a part of the Cloud Computing division. These talks included:

-

Twisted AMQP and Thrift: Bridging Messaging and RPC for building scalable distributed applications – Twisted bridges to AMQP and Thrift.

-

Concurrency and Distributed Computing with Python Today – Jesse Noller did a great job surveying the various offerings available in Python today. There’s a lot of stuff there, but I think that there’s still quite some way to go yet. That’s not picking on Python, that’s just my general view of this space.

-

Drop ACID and think about data – Bob Ippolito did a really nice survey of the various non-relational/non-transactional data storage options out there. Bob actually tried many of these, so the survey is useful for weeding out systems aren’t really ready for prime time. A must view if you haven’t been paying attention to this space.

-

Pinax: a platform for rapidly developing websites – I’ve been following Pinax via Twitter for some time now, and James Tauber and I were involved at the beginning of the Apache XML project almost 10 years ago. Despite all that, we’ve never actually met in person until this week. James had a tough job with his talk. Pinax is very new, so he could either talk for the people who didn’t know what Pinax is, or he could talk to people wanted to know where things were. James knew this was going to be a problem and said so in his talk. And it was, at least for me. Fortunately, I managed to sit down with James at the sprints and get my questions answered. Zed Shaw recently wrote a (very positive) review of Django. That’s interesting since Zed was a hard core Rails guy. It’s also interesting because he called out Django’s emphasis on modularity and Pinax as an example of that modularity. My questions about Pinax were mostly about what (if anything) Pinax has done to build on the modularity provided by Django. At the moment, the various Pinax components cooperate mostly via conventions. Things are still early in Pinax, and I wasn’t surprised to hear this. James did say that some conventions were close to getting codified/documented/supported by the framework, which is what I am really interested in. In some ways, the data representation and modularity problems are similar to the kinds of problems that we were trying to solve for Chandler. Pinax is in the social application domain and Chandler is in the PIM domain, so while there are some similarities there are also differences. I’ll definitely be sticking my nose a bit deeper into the Pinax checkout that’s been sitting on my hard disk.

The most entertaining talk that I attended was Ian Bicking’s Topics of Interest. Ian took the invitation to speak on something of interest quite literally which created an air of mystery. In the end, Ian prepared some slides (some of which were quite thoughtful and introspective), used an instance of the new Google Moderator to queue up some audience questions, and created an IRC backchannel which he kept on the screen during his talk. The result has to be watched (and the video is already up) to be understood. It was quite hilarious, with the exception of some unpleasant commentary after someone in IRC asked “why aren’t there more women at PyCon”. The resulting IRC conversation only serves as an explanation for why. Many people felt this way, and discussion of this spilled out into Twitter, and I hope that perhaps we can change things for the better.

I gave my talk, Challenges and Opportunities for Python, and got a pretty good reception. I had a number of hallway and other conversations with people based on the content. I think that I was successful in giving people a perspective on the dynamic language world as a whole, on Python’s place in it, and some things that we might be able to do in order to grow. You can watch the video and make your own assessment, and decide if there are actions worth taking.

This year the conference is benefitting from a great new website (built in Django), and you’ll find the slides and video for each talk on the links. The video team is doing a great job of cranking out the video, so all of them should be up soon, or you can go to pycon.blip.tv to see them all together. Here are some talks that I am going to be checking out:

-

Coverage testing, the good and the bad. (And for the nth PyCon, I managed to not meet Ned Batchedler! – I miss the CrowdVine that was used last year, along with “meet the author” button that would send you to CrowdVine)

-

Behind the scenes of EveryBlock.com – plug GeoDjango

-

Paver: easy build and deployment automation for Python projects – (I didn’t meet up with Kevin Dangoor either)

The Lightning Talks

I put the lightning talks in a separate category from the talks because they are a phenomenon at PyCon. This year there were two lightning talk sessions, one at the beginning of each day and one at the end of each day. That’s 6 sessions of lightning talks! Jacob Kaplan-Moss only allowed signups for the next session, and it was truly first come first serve (without last year’s arrangement with the sponsors). There were a number of really good lightning talks. There really isn’t a good record of what got presented except perhaps on Twitter. A search for #pycon should get most of it.

Update: the lightning talks were also video’ed and will be posted on pycon.blip.tv

The Sprints

The PyCon sprints remain a phenomenon. While I don’t think quite as many people stayed this year as last year, there were still a lot of people — enough to fill the basement conference rooms at the Crowne Plaza hotel, and enough to need one of the ballrooms to serve lunch and dinner in. Once again, I hung at the Jython sprint, and wandered in and out of the Django and Pinax sprints. During the two days of sprints that I stayed for, I observed the folks working on ctypes for Jython actually crashing the JVM. SQLAlchemy started to really run on Jython and so did Twisted. Four days of hacking with the core developers of a project generally tends to produce results. So does spending time to bring new people from the community into your project.

I reported a bug in Django as I tried to get buildout setup to do Django on MySQL. I’m talking about Python and MySQL at the MySQL conference in a few weeks, so I was working on my example code. Turns out that MySQLdb doens’t build cleanly on the Mac. The trunk version almost builds cleanly, so I used that, but that version chokes something in Django. Before I discovered that I had done some gymnastics involving a git-svn clone of MySQLdb, a push of that to github, and a git recipe for buildout. I never quite got the git/buildout part working and I decided that it was overkill and that’s when I finally discovered that the trunk didn’t work with Django.

Of course, the sprints are also a time to catchup with/meet people in the community. It’s a time when there are friendly rivalries, joking, and alcohol. One of the momentous occasions during 2008, was that Django got a pony.

The exuberant Django people decided to bring the pony to PyCon…

Guido decided that he wanted the pony…

This all made for great fun and entertainment, which then spilled over into the sprints as a three way Python Core/Django/Pinax feud, which lead to things like this and this. This is hard core fun, people.

Overall Conference Commentary

The organizers estimated the attendance for this year’s PyCon at around 900 people. That’s a slight decline from last year, but the economic situation is much much worse than it was last year. I think that a 10% decline is a huge success, and a testament to the growth of interest in Python and it’s surrounding ecosystem.

From an organizational point of view, PyCon is continuing its tradition of being a mostly volunteer organized conference. It this respect it is a tour de force, at least in the space of open source conferences. PyCon is using a production company to assist, just as ApacheCon is, but the on site footprint of that company is much smaller than the on site footprint of the company for ApacheCon. Moreover, the number of volunteers helping with things is just enormous. Session chairs, runners to escort speakers from the green room to their sessions, a web site builder, lightning talk coordinator, open spaces coordinator, greeters at the conference desk, photographers, and I’m sure there are a bunch more people whose roles I didn’t even get to hear about. Absent a fancy lighted stage display for keynotes, production value wise, I feel that PyCon is operating at the same level of quality as any of the O’Reilly conferences. The program was excellent – tutorials, keynotes, invited talks, regular talks, open spaces, and lightning talks.

With PyCon, the Python community is getting way more mileage out of its face to face time than any other open source community. The combination of lightning talks, open space, and sprints creates a powerful feedback loop within the conference proper, which then extends into the sprint days. This dynamic has evolved over the years as PyCon attendees have come to understand the role of these vehicles. Here’s how it works:

The lightning talks allow anyone, regardless of stature, influence, or reputation to get in front of the entire conference. People now recognize that some of the most interesting, surprising, and entertaining moments of PyCon take place during the lightning talks. It’s a measure of the influence of the lightning talks that even the 8AM morning lightning talk sessions were well attended. At other conferences the morning sessions are reserved for keynote presentations by paying sponsors. I usually skip these because the content value is low. But I definitely got up to make sure that I hit those 8AM lightning talks. If you’ve gotten in front of the community with a lightning talk, you can extend your reach by scheduling an open space session.

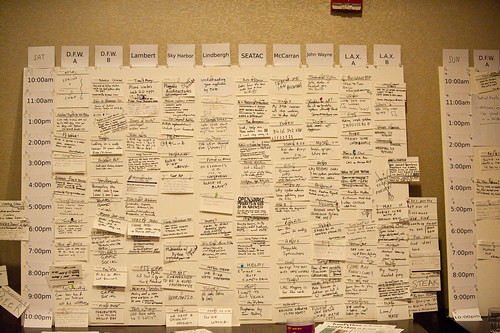

Above is a shot of the open space board for Saturday. Note that the time slots go from 10AM to 10PM. There were a few prank type sessions, but for the most part, that board really is full all day long with 10 rooms available during each one hour time slot. Consider that there were 4 ballrooms for the talks, and that the talks went from 10:20AM till 5PM. There was way more air time in the open space sessions, and people certainly made use of it. This is why PyCon is a working conference – it’s not only about transfer of information, real work gets done there.

The only tricky thing with open space is that it would be great to have electronic access to the contents of the open space board during the conference. That would help make the open spaces a first class citizen in the minds of attendees. This is an interesting problem, because part of the value of the open space is the physical board, so turning it all electronic wouldn’t be a good idea. I wonder if Kaliya Hamlin has an experience with this sort of thing.

Used well, the open space sessions are great for organizing your little (or big) slice of the world wide Python community. They are also great as a prelude to a sprint once the conference has finshed. And as I’ve already mentioned, the sprints are a great time to reinforce a project’s community as well as move it forward.

All of this notwithstanding, the PyCon organizers are not sitting on their laurels. They keep on looking for ways to improve the conference. The buckets you see above are an example of this. Instead of paper or electronic surveys, attendees were asked to vote for talks by taking a red chip and tossing it a bucket on their way out the door. Green for good, yellow for ‘meh’, and red for bad. This is way less effort than the surveys, and I observed a decent number of people putting in their chips. Doug Napoleone has more on the origins of this system, as well as a pointer to the raw data on the results.

Twitter is now in the mainstream at PyCon. Guido mentioned Twitter during his keynote, and used it to ask questions during the conference. One of James Tauber’s first slides told people which hashtag to use when covering his talk. I’d guess that I got at least 20 new followers each day of PyCon, and I think that I might even be trained to use hashtags now. #pycon was in the top 10 Twitter during the days of the conference. The takeway is that if you are going to a conference and you are not on twitter, you are missing out. The corollary is that if you are a conference, and you aren’t making use of twitter, you need to pay attention. Ian Skerrett has an interesting post on how they used Twitter during EclipseCon. One thing that was missing was a video display of the search for #pycon. I know from talking with Doug Napoleone that he has some wonderful ideas for taking all the social networking stuff to the next level. I’m really looking forward to seeing that next year.

Photography

I’ve been to a lot of conferences over the last few years, always with a camera in hand. At each conference I shoot less and less. There are now lots of people swarming around with cameras, and I feel a bit done out with shots of people speaking from the front of a room, rows of white male attendees listening to a talk, and the rest of the usual conference shots. The same thing happpend with me and liveblogging conferences. Also, it’s hard to do the hallway track and do decent photography. Last year, the PyCon organizers asked me to take some official pictures, which I was happy to do. This year they didn’t (which was fine by me), but I had planned to bring the camera anyway, because PyCon is PyCon, and photographing there is one way that I try to give back to the community.

It turns out that the organizers were way more organized about photography this year. They actually had someone to coordinate the photography for the conference. Steven Wilcox had a last minute emergency and couldn’t make it. I found out about all of this just a half an hour before I left for the airport. Steven had planned to do headshots of Pythonistas, and was planning to get studio lighting equipment and so on. All of that was now up in the air. Since I had done a bunch of headshots of ASF people at ApacheCon, I tossed some Strobist lighting gear into my suitcase, just in case. By the time I landed in O’Hare, Erich Heine had stepped up to replace Steven, and I joined the “Python Paparazzi” or “pyparazzi”, along with Erich, Jason Samsa, Dan Ryder, and Stéphane Jolicoeur-Fidelia.

Since PyCon was in Chicago last year, I was familiar with the Crowne Plaza Hotel, which is a decent hotel, but nothing to write home about. This year the conference proper moved to the Hyatt Regency down the street. PyCon has a tradition of trying to keep costs low in order to keep the conference accessible to the community, so I was expecting something like the Crowne Plaza. I couldn’t have been more wrong. The Hyatt is a photographer’s paradise. There are lots of interesting colors, textures, and some areas with beautiful overhead natural light. If you were going to photograph a wedding, you would die for settings like these for the bridal portraits.

This tiled inset in wall turned into the backdrop for James Tauber’s headshot.

It doesn’t have to be strobe(ist) to be a good headshot!

This orange lit panel behind a bench seat turned into the backdrop for Jim Baker’s headshot.

In addition to the pyparazzi, there were plenty of other cameras floating around the conference. Andy Smith decided to do a photographic project called the “Beards of Python“. When this set was announced on Flickr, it caused some Twitter buzzing amongst some of the female attendees of the conference. One thing about photographers is that we (or at least I) are always willing to take some interesting photos. So when the Twitter buzzing reached me, I offered to photograph any interested Geek Girls. James Duncan Davidson and I have discussed the value of trying to photograph female attendees at technology conferences. Since our photographs are often used for advertising, this can be a way of helping women feel more comfortable about attending — knowing that there will be other women there can be a help. So not only did I get to shoot more pictures of interesting people, I hope that in some small way this will contribute to making PyCon friendlier to women.

This is Catherine Devlin, a contributor to sqlpython. Go read her post “Five minutes at PyCon change everything” for an actual example of the lightning talk/open space/sprint scenario that I described above.

The entire set of Pythonista headshots, as well as the rest of my conference coverage are up on Flickr. Who knows what we’ll come up with for next year in Atlanta…

Travel

Regular readers will know that a trip to PyCon traditionally involves some kind of travel mishap. This year was pretty minor compared to previous years. United lost my luggage for the flight from Seattle to O’Hare, despite the fact that I arrived 2.5 hours early, and checked in at the “Premier” checkin line. I got my bag the next day, so it wasn’t really that bad. Maybe next year will be the PyCon with no travel glitches.